Using Slack to auto-deploy templatized software projects

Here at FloQast, we use a combination of GitHub, Jenkins, Terraform, and AWS for maintaining, deploying, building, and housing our infrastructure and software applications. One of the projects we are developing is a collection of React applications that are stored in an S3 bucket sitting behind a CloudFront content delivery network. Since all of these React applications start life with a common set of attributes, we thought: “What if we could create a new React application without a developer needing to know about the infrastructure or our CI/CD pipeline? Moreover, what if they could do this all from within Slack?” With this question, our GRAIL project was formed.

The goal of this project is to quickly create new GitHub repositories based on a set of templates, and to do so in an automated fashion. Our developers can then iterate more quickly without needing to worry about setting up any backend infrastructure. With a bit of backronyming, we settled on “(G)itHub (R)epository (A)utomation to (I)ncrease (L)egerity”, or GRAIL.

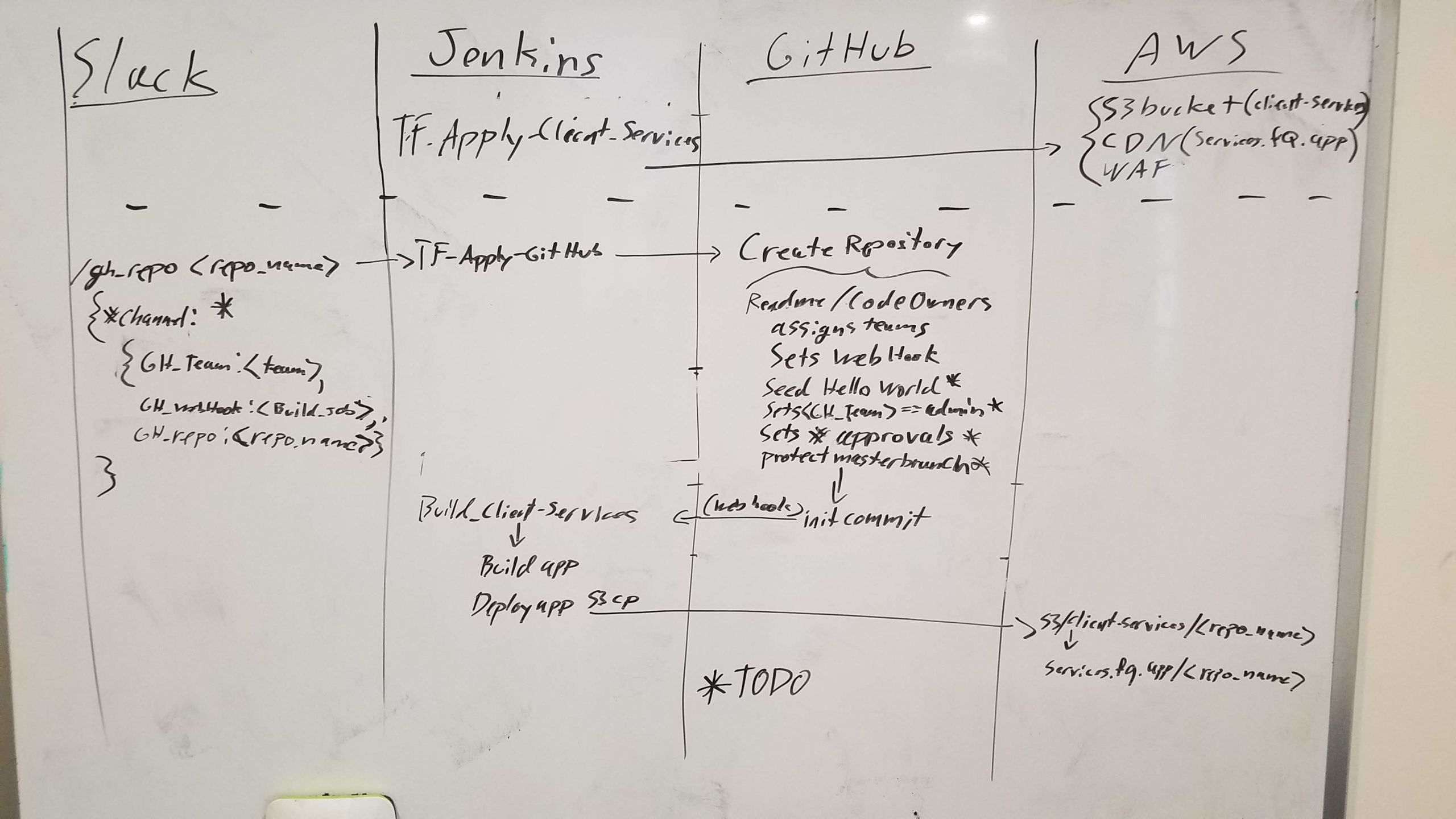

As with most projects, GRAIL started life as a whiteboarded sequence diagram:

Grail Whiteboard

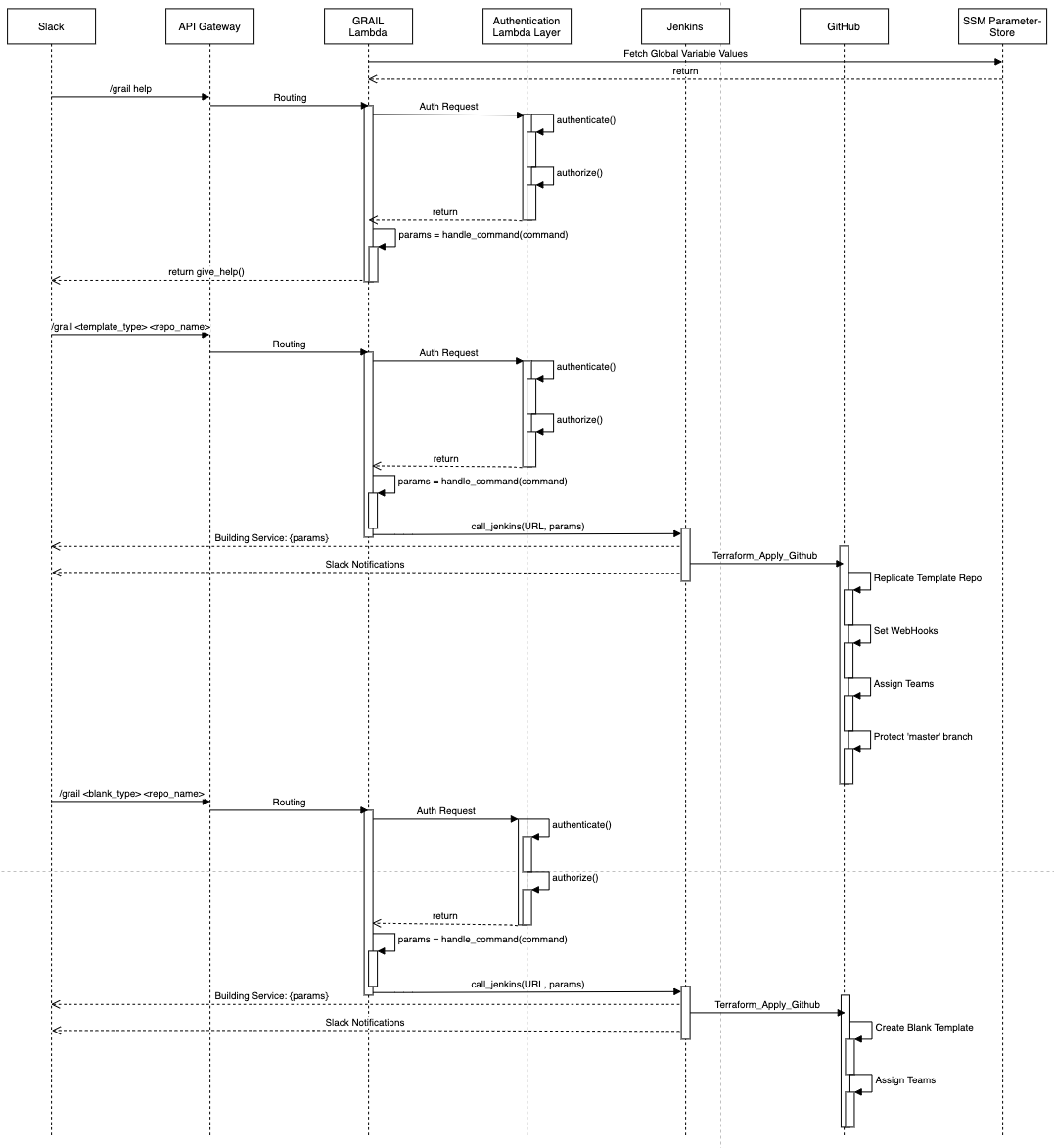

Eventually, the sequence diagram ended up looking like:

GRAIL Sequence Diagram

GitHub Terraform

At the base of our stack is a set of Terraform scripts that run against the GitHub Terraform provider. This workflow can be set up independently of the Slack slash command described later and can be triggered in any number of ways. Here at FloQast, we have chosen to use Jenkins Pipeline jobs for applying our Terraform.

We can create two classes of GitHub repositories with this job: Template Repositories and Blank Repositories.

Template Repositories

For each of our standard projects, we started by making a GitHub template repository. This template repository contains the minimum code, or scaffolding, required to create a basic application. This can be a React.js application, a simple Lambda, or even a system for housing multiple Lambdas. Our Terraform then creates a copy of this repository by using the `template` block when creating the new repository.

```hcl
# Create Templatized GitHub Repository
resource "github_repository" "template_repo" {
  name        = local.repository_name
  description = "Repository for ${var.repository_name}"
  private     = true

  template {
    owner      = var.github_organization
    repository = var.template_repository
  }

  count = var.templatized_repo == "template" ? 1 : 0
}
```

After the scaffolded repository has been created, we add branch protection to the ‘master’ branch. We enforce status checks for repo admins, dismiss stale reviews, require code owner reviews, a minimum of two reviews, and enforce that only our QE team can merge into ‘master.’

```hcl
resource "github_branch_protection" "branch_protection" {
  repository     = github_repository.template_repo[count.index].name
  branch         = "master"
  enforce_admins = true

  required_pull_request_reviews {
    dismiss_stale_reviews           = true
    require_code_owner_reviews      = true
    required_approving_review_count = 2
  }

  depends_on = [
    github_team_repository.qe_production
  ]

  restrictions {
    teams = [
      data.github_team.qe_production.slug
    ]
  }

  count = var.templatized_repo == "template" ? 1 : 0
}
```

Next, we add GitHub Webhooks to trigger Validation and Build jobs in our Jenkins server. More to come on this below.

```hcl
# Add Webhooks to the repository
resource "github_repository_webhook" "jenkins_push" {
  repository = github_repository.template_repo[count.index].name

  configuration {
    url          = local.webhook_url
    content_type = "form"
    insecure_ssl = false
  }

  events = ["push"]
  count  = var.templatized_repo == "template" ? 1 : 0
}
```

Blank Repositories

Sometimes we just want a blank repository, so we allow this as well.

```hcl
# Create Bare Bones GitHub Repository
resource "github_repository" "stock_repo" {
  name        = local.repository_name
  description = "Repository for ${var.repository_name}"
  private     = true
  auto_init   = true

  count = var.templatized_repo == "stock" ? 1 : 0
}
```

To switch between the two types of repositories we pass a variable called `templatized_repo` which is defined in our Jenkins job:

```groovy
stage('Set Template Parameters') {
    steps {
        script {
            env.TEMPLATIZED_REPO = 'template'
            if (params.TEMPLATE_REPO == 'template-client') {
                env.BUILD_JOB = 'Validate_client'
            } else if (params.TEMPLATE_REPO == 'custom') {
                env.TEMPLATIZED_REPO = 'stock'
                env.BUILD_JOB = 'notapplicable'
            }
        }
    }
}
```
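The branching in this stage amounts to a small lookup from `TEMPLATE_REPO` to the pair of values Terraform needs. As a hedged sketch, here is the same selection written as a plain Python function so the mapping can be unit-tested outside Jenkins. The function name is hypothetical, and returning `None` for templates other than `template-client` is an assumption, since the stage above simply leaves `BUILD_JOB` unset in that case.

```python
from typing import Optional, Tuple


# Hypothetical helper mirroring the 'Set Template Parameters' stage.
# Returns (templatized_repo, build_job) for a given TEMPLATE_REPO value.
def select_template_parameters(template_repo: str) -> Tuple[str, Optional[str]]:
    if template_repo == 'custom':
        # Blank repository: no template and no downstream validation job
        return 'stock', 'notapplicable'
    if template_repo == 'template-client':
        return 'template', 'Validate_client'
    # Other templates: the stage leaves BUILD_JOB unset (modelled as None here)
    return 'template', None
```

The first element is exactly what the `count = var.templatized_repo == "template" ? 1 : 0` expressions compare against, so only one of the two `github_repository` resources is ever created.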

This variable, along with several others, is then passed into our Terraform command:

```shell
terraform plan -refresh=true -input=false \
  -var=repository_name=${params.REPO_NAME} \
  -var=jenkins_credentials=${JENKINS_CREDENTIALS} \
  -var=build_job=${BUILD_JOB} \
  -var=template_repository=${params.TEMPLATE_REPO} \
  -var=templatized_repo=${TEMPLATIZED_REPO} \
  -out=tfplan.plan-${ENVIRONMENT}-${BUILD_NUMBER}
```
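Because this command is presumably run from a Jenkins `sh` step, values such as the repository name are interpolated straight into a shell command line, so a parameter containing spaces or shell metacharacters could split or alter the command. A hedged Python sketch of the alternative is to build the arguments as a list, so each `-var` stays a single argument. The function name and sample values are hypothetical, and this is not how the pipeline above runs Terraform.

```python
from typing import Dict, List


def terraform_plan_args(tf_vars: Dict[str, str], environment: str,
                        build_number: str) -> List[str]:
    """Build a `terraform plan` argv list; each -var is one list element."""
    args = ['terraform', 'plan', '-refresh=true', '-input=false']
    for name, value in tf_vars.items():
        args.append(f'-var={name}={value}')
    args.append(f'-out=tfplan.plan-{environment}-{build_number}')
    return args


# Hypothetical values; the list could be passed to subprocess.run(args, check=True)
args = terraform_plan_args(
    {'repository_name': 'my-new-repo', 'templatized_repo': 'template'},
    environment='dev', build_number='42')
print(args)
```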

GitHub Teams

Regardless of the type of GitHub repository being created, we then add our GitHub Teams to the repository with ‘push’ (i.e., ‘write’) permissions.

```hcl
# Add teams to repository
resource "github_team_repository" "engineering_repo" {
  team_id    = data.github_team.engineering.id
  repository = data.github_repository.repo.name
  permission = "push"
}
```

Jenkins

To get the above working within a Jenkins environment, a couple of credentials need to be set up. There are multiple ways to create credentials within Jenkins; at FloQast, our preferred method is to use the Jenkins Configuration as Code plugin in conjunction with the Configuration as Code AWS SSM plugin.

The first required credential is a GitHub token with the ‘repo’ scope. This credential is stored as Kind: Secret text, which we’ve named github_token. Per the GitHub Terraform provider documentation, the token is read from the GITHUB_TOKEN environment variable, so we bind it to that variable in the Terraform Plan and Apply stages of the associated Jenkins Pipeline file.

```groovy
withCredentials([string(credentialsId: 'github_token', variable: 'GITHUB_TOKEN')]) {
    terraformApply()
}
```

At FloQast, we use GitHub webhooks to trigger deployment jobs whenever code is pushed or merged. Since the webhooks require a username and token to get through our Okta authentication, we need to pass these to our Terraform code as a secure string. This credential is stored as Kind: Username with password, which we’ve named github_webhook_id. In this case, the username is the company email of a service account and the password is an API token attached to that user’s Jenkins account.

This credential is then imported into our Jenkins pipeline as an environment variable:

```groovy
environment {
    JENKINS_CREDENTIALS = credentials('github_webhook_id')
}
```

The credential can then be used as a variable when it is passed to Terraform:

```shell
terraform plan -refresh=true -input=false \
  -var=jenkins_credentials=${JENKINS_CREDENTIALS} \
  ...
  -out=tfplan.plan-${ENVIRONMENT}-${BUILD_NUMBER}
```

Our Terraform then creates a local variable webhook_url which is passed to the github_repository_webhook resource mentioned above:

```hcl
locals {
  webhook_url = "https://${var.jenkins_credentials}@${var.alb_dns}/job/${var.build_job}/buildWithParameters"
}
```
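When a Username with password credential is bound through `credentials()`, Jenkins sets the variable to a single `username:password` string, which is why it can be dropped into the URL's userinfo as above. Because the username here is an email address, its `@` is not allowed raw inside userinfo by RFC 3986, and clients differ in how they split such an authority. A hedged Python sketch of composing the same URL with both parts percent-encoded follows; the helper name and sample values are hypothetical, and within Terraform the built-in `urlencode()` function could play a similar role.

```python
from urllib.parse import quote, urlsplit


def jenkins_webhook_url(username: str, token: str, host: str, build_job: str) -> str:
    """Compose https://user:token@host/job/<job>/buildWithParameters.

    quote(..., safe='') percent-encodes '@' and ':' so they cannot be
    mistaken for userinfo or host delimiters.
    """
    userinfo = f"{quote(username, safe='')}:{quote(token, safe='')}"
    return f"https://{userinfo}@{host}/job/{build_job}/buildWithParameters"


url = jenkins_webhook_url('svc-jenkins@example.com', 'api-token',
                          'jenkins.internal.example.com', 'Validate_client')
print(url)
print(urlsplit(url).hostname)  # jenkins.internal.example.com
```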

The final step is to have a Jenkins Pipeline file which consumes the credentials, any additional variables (such as the repository name), and then runs the Terraform steps.

The GRAIL AWS Lambda

There are four main components to the GRAIL project: Terraform for creating the resources and uploading the AWS Lambda source code, an AuthN/AuthZ Lambda layer, the Lambda function source code that handles the logic, and a Jenkins Pipeline job for deploying the Terraform to AWS.

Terraform

There are multiple parts to the Terraform, but the main pieces are creating SSM Parameter Store placeholders, creating an API Gateway, and packaging and uploading the Lambda src directory.

Using the Terraform aws_ssm_parameter resource, we create placeholder values for any values that are secret or that differ between our production and development environments. These values are loaded into our Lambda at run time, so they are available to our function whenever the Slack command is called.

Here is an example of one such resource:

```hcl
resource "aws_ssm_parameter" "slack_signing_secret" {
  name        = "/slack/ops/signingsecret"
  type        = "SecureString"
  value       = "terraform"
  description = "Slack Integration Secret"
  overwrite   = true // Needed for the lifecycle change

  lifecycle {
    ignore_changes = ["value"] // Don't overwrite our manually entered value
  }
}
```
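Because Terraform only creates the placeholder, the real secret has to be entered out of band, and `ignore_changes` keeps later applies from reverting it. One way to enter that value is boto3's `put_parameter`, sketched below under the assumption that the caller has IAM permission to write the parameter. The helper name is hypothetical; the AWS console or `aws ssm put-parameter` work just as well.

```python
def set_secure_parameter(ssm_client, name: str, value: str) -> int:
    """Overwrite a Terraform-managed placeholder with the real secret.

    ssm_client is expected to be boto3.client('ssm'). Overwrite=True is
    required because Terraform has already created the parameter.
    """
    response = ssm_client.put_parameter(
        Name=name,
        Value=value,
        Type='SecureString',
        Overwrite=True,
    )
    # SSM responds with the new version number of the parameter
    return response['Version']


# Usage (requires AWS credentials):
# import boto3
# set_secure_parameter(boto3.client('ssm'), '/slack/ops/signingsecret', '<secret>')
```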

The parameters that we set are as follows:

- slack_signing_secret: Used to verify that the request came from Slack

- slack_authorized_channel: Channel IDs for any Slack channels authorized to run the Slack command, entered as a JSON value, e.g. `{"channel-name": "CHANNELID"}`

- jenkins_username: Username for a Jenkins user with access to run the required jobs

- jenkins_user_token: Jenkins API token for the above jenkins_username, used to authenticate with the Jenkins Okta integration

- jenkins_job_token: Job token for use with the build-token-root Jenkins plugin

- jenkins_environment: We run multiple Jenkins Master instances: one for development and one for production. The Agents attached to one of these Masters do not have access to the other Master’s environment. This variable is used as a job parameter to tell the Agent which environment to deploy against.

- jenkins_protocol: ‘http’ or ‘https’; we recommend setting this value to ‘https’

- jenkins_internal_url: DNS entry for the Jenkins instance

- jenkins_job_path: The path to the Jenkins job as described by the build-token-root plugin, e.g. myFolder/myjob

We set up an API Gateway REST API as the entry point to our Lambda. This API Gateway acts as a proxy and forwards all requests directed at that API endpoint to our GRAIL Lambda. The API Gateway currently uses Lambda proxy integration, which allows for faster turnaround of code without having to reconfigure the API Gateway for each code revision (e.g., new endpoints or new, modified, or deleted methods).

For deploying the Lambda code itself, we use the archive_file data source to create a zip file containing the project code.

```hcl
data "archive_file" "lambda_zip" {
  type        = "zip"
  output_path = "${local.archive_path}"
  source_dir  = "../src/slack-jenkins-auth"
}
```

We then use the aws_lambda_function resource to create the Lambda, give it access to the Jenkins VPC, and upload the source code.

```hcl
resource "aws_lambda_function" "lambda_function" {
  function_name    = "${var.function_name}"
  filename         = "${local.archive_path}"
  source_code_hash = "${data.archive_file.lambda_zip.output_base64sha256}"
  handler          = "lambda_function.lambda_handler"
  runtime          = "${var.runtime}"
  role             = "${aws_iam_role.lambda_role.arn}"

  vpc_config {
    security_group_ids = ["${data.aws_security_group.jenkins_master.id}"]
    subnet_ids         = ["${data.aws_subnet.ops_private_subnet_a.id}"]
  }
}
```

In addition to this, we use an IAM module to attach a Lambda role with the AWSLambdaVPCAccessExecutionRole AWS policy and an additional custom policy granting ssm:GetParameter for the required SSM resources.

```hcl
resource "aws_iam_role" "lambda_role" {
  name               = "${var.function_name}"
  path               = "/service-role/"
  assume_role_policy = "${data.template_file.lambda-roles-file.rendered}"
}

resource "aws_iam_role_policy" "ssm_parameters_policy" {
  name   = "${var.name}-ssm-parameters-policy"
  role   = "${aws_iam_role.lambda_role.name}"
  policy = "${data.template_file.ssm_parameters_policy_file.rendered}"
}

resource "aws_iam_role_policy_attachment" "lambda_vpc_access" {
  role       = "${aws_iam_role.lambda_role.name}"
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaVPCAccessExecutionRole"
}
```
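The rendered `ssm_parameters_policy_file` template is not shown. A policy granting `ssm:GetParameter` on specific parameters would look roughly like the document generated below; as a hedged sketch it is built in Python, and the region, account ID, and function name are placeholders. Parameter ARNs take the form `arn:aws:ssm:<region>:<account>:parameter/<name>`, with the leading slash of a hierarchical name dropped. A bulk `get_parameters` call would additionally need the `ssm:GetParameters` action.

```python
import json
from typing import Iterable


def ssm_read_policy(region: str, account_id: str, names: Iterable[str]) -> str:
    """Return an IAM policy JSON allowing ssm:GetParameter on the given names."""
    resources = [
        # '/slack/ops/signingsecret' -> '...:parameter/slack/ops/signingsecret'
        f"arn:aws:ssm:{region}:{account_id}:parameter/{name.lstrip('/')}"
        for name in names
    ]
    policy = {
        'Version': '2012-10-17',
        'Statement': [{
            'Effect': 'Allow',
            'Action': ['ssm:GetParameter'],
            'Resource': resources,
        }],
    }
    return json.dumps(policy, indent=2)


print(ssm_read_policy('us-west-2', '123456789012',
                      ['/slack/ops/signingsecret', '/jenkins/protocol']))
```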

AuthN and AuthZ Lambda Layer

To ensure that any event our Lambda receives was sent by an appropriate agent, we perform authentication and authorization validation:

Authentication: Following Slack’s guide to verifying requests, we authenticate that the event body we receive came from Slack.

```python
# In the Lambda handler (fa is the AuthN/AuthZ layer module)
authenticated = fa.authenticate(event, SLACK_SIGNING_SECRET)

...

# In the AuthN/AuthZ layer
import base64
import hashlib
import hmac
import time

def authenticate(event, slack_signing_secret):
    """ Authenticate the request came from Slack """
    request_body = base64.b64decode(event['body']).decode('ascii') if event['isBase64Encoded'] else event['body']
    timestamp = event['headers']['X-Slack-Request-Timestamp']
    sig_basestring = f'v0:{timestamp}:{request_body}'.encode('utf-8')
    slack_signature = event['headers']['X-Slack-Signature']
    # Reject requests older than five minutes to guard against replay attacks
    if abs(time.time() - float(timestamp)) > 60 * 5:
        return False
    # hmac.new() needs a bytes key, but SSM parameter values are str
    hmac_key = hmac.new(slack_signing_secret.encode('utf-8'), sig_basestring, hashlib.sha256)
    my_signature = f'v0={hmac_key.hexdigest()}'
    return hmac.compare_digest(my_signature, slack_signature)
```
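To exercise this check locally without Slack, a request can be signed the same way: HMAC-SHA256 over `v0:{timestamp}:{body}`, keyed with the signing secret and hex-encoded with a `v0=` prefix. The minimal sketch below pairs a signer with a verifier that applies the same five-minute window; the helper names and the fake secret are hypothetical.

```python
import hashlib
import hmac
import time


def slack_signature(signing_secret: str, timestamp: str, body: str) -> str:
    """Compute the X-Slack-Signature value for a request body."""
    basestring = f'v0:{timestamp}:{body}'.encode('utf-8')
    digest = hmac.new(signing_secret.encode('utf-8'), basestring,
                      hashlib.sha256).hexdigest()
    return f'v0={digest}'


def verify(signing_secret: str, timestamp: str, body: str, signature: str,
           max_age: int = 60 * 5) -> bool:
    """Reject stale timestamps, then compare signatures in constant time."""
    if abs(time.time() - float(timestamp)) > max_age:
        return False
    expected = slack_signature(signing_secret, timestamp, body)
    return hmac.compare_digest(expected, signature)


secret = 'not-a-real-secret'
ts = str(int(time.time()))
body = 'channel_id=C123&user_id=U456&text=help'
sig = slack_signature(secret, ts, body)
print(verify(secret, ts, body, sig))               # True
print(verify(secret, ts, body + 'tampered', sig))  # False
```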

Authorization: We wanted to make sure that we could limit who is able to run the GRAIL command and restrict where the command can be launched from. We decided to do this by creating a private channel where only certain people would have access to post. As mentioned above, the Channel ID is then stored as an SSM parameter where we validate that the command did, in fact, come from our specified channel. In addition, we log the user who initiated the command. If the command came from some other channel, then we log the user as ‘not authorized.’

```python
# In the Lambda handler (fa is the AuthN/AuthZ layer module)
authorized = fa.authorize(event, json.loads(AUTHORIZED_CHANNELS_DICT))

...

# In the AuthN/AuthZ layer
import base64
import logging

def authorize(event, authorized_channels_dict):
    """ Authorize that the request came from a channel in authorized_channels_dict """
    request_body = base64.b64decode(event['body']).decode('ascii') if event['isBase64Encoded'] else event['body']
    # Slack sends form-encoded key=value pairs joined by '&'
    body_dict = dict(x.split("=") for x in request_body.split("&"))
    user_id = body_dict['user_id']
    channel_id = body_dict['channel_id']
    if channel_id in authorized_channels_dict.values():
        logging.warning('user_id: %s is authorized', user_id)
        return True
    logging.warning('user_id: %s is not an authorized_user', user_id)
    return False
```

Lambda

At the time of writing, there are eight main sections to our Lambda function.

Configuration JSON file: We use a JSON file to store the SSM Parameter Store paths that hold environment-specific secrets, credentials, and parameters. This file is loaded into the Lambda and used to set the Global Variables described below. These paths are the same ones set up by the Terraform SSM resources described above:

```json
{
  "slack_secret_ssm_loc": "/slack/ops/signingsecret",
  "slack_channel_ssm_loc": "/slack/channel_id",
  "jenkins_environment_ssm_loc": "/jenkins/environment",
  "jenkins_protocol_ssm_loc": "/jenkins/protocol",
  "jenkins_username_ssm_loc": "/jenkins/username",
  "jenkins_user_token_ssm_loc": "/slack/ops/jenkinsusertoken",
  "jenkins_url_ssm_loc": "/jenkins/internalurl",
  "jenkins_job_path_ssm_loc": "/slack/ops/jobpath",
  "jenkins_job_token_ssm_loc": "/slack/ops/jenkinsjobtoken"
}
```

Global Variables: Because these values live in module-level Global Variables, we only have to call Parameter Store once, when the Lambda execution environment is initialized, rather than every time the Slack command is run. We use boto3 to get the values from Parameter Store and store them for later use:

```python
import boto3

# CONFIG holds the parsed configuration JSON file shown above
SSM_CLIENT = boto3.client('ssm')
AUTHORIZED_CHANNELS_DICT_PARAMETER = SSM_CLIENT.get_parameter(Name=CONFIG['slack_channel_ssm_loc'], WithDecryption=True)
AUTHORIZED_CHANNELS_DICT = AUTHORIZED_CHANNELS_DICT_PARAMETER['Parameter']['Value']
```
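If each of the nine paths in the configuration file is fetched with its own `get_parameter` call as shown, every cold start pays nine round trips. A hedged sketch that loads them with a single `get_parameters` call instead; that API accepts at most 10 names per request, so a longer list would need batching. The function name is hypothetical.

```python
from typing import Dict


def load_config_values(ssm_client, config: Dict[str, str]) -> Dict[str, str]:
    """Map each config key (e.g. 'slack_secret_ssm_loc') to its decrypted value."""
    names = list(config.values())
    if len(names) > 10:
        # GetParameters accepts at most 10 names per request
        raise ValueError('split the names into batches of 10')
    response = ssm_client.get_parameters(Names=names, WithDecryption=True)
    # Fail fast on cold start if any path is missing or misspelled
    if response['InvalidParameters']:
        raise KeyError(f"missing SSM parameters: {response['InvalidParameters']}")
    by_name = {p['Name']: p['Value'] for p in response['Parameters']}
    return {key: by_name[path] for key, path in config.items()}


# At module level, so it runs once per execution environment:
# import boto3
# VALUES = load_config_values(boto3.client('ssm'), CONFIG)
# AUTHORIZED_CHANNELS_DICT = VALUES['slack_channel_ssm_loc']
```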

Authentication and Authorization: When an event is received by the Lambda, the first step is to verify AuthN and AuthZ. This is handled by our Lambda layer, as outlined above.

Parsing the Command: When our event is received, we want to separate the command from the rest of the metadata. We start by decoding the event body and then extracting the text portion of the command, which we split into a list of arguments for future use:

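```python
import base64

command = parse_command(event)

...

def parse_command(event):
    """ Return the slash-command text as a list of arguments """
    request_body = base64.b64decode(event['body']).decode('ascii') if event['isBase64Encoded'] else event['body']
    body_dict = dict(x.split("=") for x in request_body.split("&"))
    # The body is form-encoded, so spaces in the command text arrive as '+'
    return body_dict['text'].split('+')
```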
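Slack sends slash-command payloads as `application/x-www-form-urlencoded`, which is why spaces arrive as `+`. Characters such as `/` or `@` arrive percent-encoded, however, and a plain split leaves them encoded. A hedged alternative uses the standard library's `urllib.parse.parse_qs`, which decodes both; the function name is hypothetical.

```python
import base64
from typing import List
from urllib.parse import parse_qs


def parse_command_qs(event: dict) -> List[str]:
    """Return the slash-command text as a list of whitespace-separated tokens."""
    body = event['body']
    if event.get('isBase64Encoded'):
        body = base64.b64decode(body).decode('utf-8')
    # parse_qs turns '+' into spaces and decodes %XX escapes
    fields = parse_qs(body, keep_blank_values=True)
    text = fields.get('text', [''])[0]
    # Return [''] for an empty command so command[0] is always safe to index
    return text.split() or ['']


event = {
    'body': 'channel_id=C123&user_id=U456&command=%2Fgrail&text=client+my_repo+--debug',
    'isBase64Encoded': False,
}
print(parse_command_qs(event))  # ['client', 'my_repo', '--debug']
```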

Help: Once a command is authenticated and the user is authorized, we allow them to start passing parameters. The first parameter we implemented was the help command. Running /grail help gives the user the available command options, their descriptions, and an example command. Users can also get more granular help by passing an argument to the help command, such as /grail help client:

```python
import json

# Inside the Lambda handler
if command[0] == 'help':
    return give_help(command)

...

def give_help(command):
    """ Build the Slack response for /grail help [service] """
    text = "GitHub Repository Automation to Increase Legerity (GRAIL)"
    attachment_text = ('• Choose your service: `client`, `system`, `lambda`, `custom`\n'
                       '• Enter your desired repository name: `new_repo_name`\n'
                       '• Optionally pass the following flag:\n'
                       '`--debug` will run Terraform in debug mode\n'
                       'example: `/grail client repo_name --debug`\n'
                       'use `/grail help {service}` for additional help')
    if len(command) > 1:
        required_params = ('• Required parameters:\n'
                           '`repository_name`')
        optional_params = '• Optional parameters:\n`--debug` will run Terraform in debug mode'
        if command[1] == "custom":
            text = "Creates a blank GitHub repository and attaches Teams with write permissions"
            attachment_text = required_params + '\n' + optional_params
    return {
        'statusCode': 200,
        'body': json.dumps({
            "response_type": "in_channel",
            "text": text,
            "attachments": [
                {
                    "color": "#36a64f",
                    "text": attachment_text
                }
            ]
        })
    }
```

Handling the command: The GRAIL command accepts the type of repository to be created, the name of the repository to be created, and a set of optional flags.

```python
params = handle_command(command)
```

Repository Type: The first parameter passed to the /grail command designates which type of repository is to be created. There could be any number of repository types; currently the code supports three templatized repositories and one blank repository. We use a dictionary to map this first parameter to its associated template repository:

```python
template_repo = {'client': 'template-react-client',
                 'lambda': 'template-nodejs-lambda',
                 'system': 'template-lambda-system',
                 'custom': 'custom'}
```

Repository Name: The second parameter passed to the /grail command is the name that will be given to the newly created GitHub Repository.

Optional Flags: There is one optional flag that can be passed to the command.

- `--debug`: Runs Terraform in debug mode

Return statement: Finally, we return a mapping of parameters to values for passing to the downstream Jenkins job:

```python
import logging

def handle_command(command):
    """ Handle the command """
    logging.warning('command: %s', command)
    template_repo = {'client': 'template-client',
                     'lambda': 'template-lambda',
                     'system': 'template-system',
                     'custom': 'custom'}
    debug = False
    if '--debug' in command:
        debug = True
    if command[0] in template_repo:
        return {
            'TEMPLATE_REPO': template_repo[command[0]],
            # JENKINS_ENVIRONMENT is one of the Global Variables loaded from Parameter Store
            'ENVIRONMENT': JENKINS_ENVIRONMENT,
            'REPO_NAME': command[1],
            'Debug': debug
        }
    return {
        'status': f'failure: {command[0]} is not a recognized service. Please run `/grail help` for a list of options'
    }
```
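`handle_command` assumes the repository name is always the second token, so `/grail client` without a name raises an `IndexError`, and `/grail client --debug my_repo` would use `--debug` as the repository name. A hedged sketch of separating flags from positional arguments before looking them up; the function name and error strings are hypothetical, and the error shape reuses the `status` key from the original failure return.

```python
from typing import Dict, List, Union

KNOWN_FLAGS = {'--debug'}


def split_args(command: List[str]) -> Dict[str, Union[str, bool]]:
    """Split tokens into service, repository name, and flags, or report an error."""
    flags = [t for t in command if t.startswith('--')]
    positional = [t for t in command if not t.startswith('--')]
    unknown = [f for f in flags if f not in KNOWN_FLAGS]
    if unknown:
        return {'status': f'failure: unknown flag(s) {", ".join(unknown)}'}
    if len(positional) != 2:
        return {'status': 'failure: usage is `/grail {service} {repo_name} [--debug]`'}
    service, repo_name = positional
    return {'service': service, 'repo_name': repo_name, 'debug': '--debug' in flags}


print(split_args(['client', '--debug', 'my_repo']))
```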

Calling Jenkins: Now that all our parameters are set, we format them into a query string and append them to the URL. When we send the request to Jenkins, the parameters are handled natively.

```python
import logging

success = call_jenkins(URL, params)

...

def call_jenkins(url, params):
    """ Call Jenkins """
    # url already contains a query string, so each parameter is appended with '&'
    for param in params:
        formatted_param = f'&{param}={params[param]}'
        url += formatted_param
    try:
        # HTTP is a module-level HTTP client (e.g. urllib3.PoolManager())
        response = HTTP.request('POST', url)
        logging.warning('response: %s', response.status)
        return response.status in [200, 201]
    except Exception as e:
        logging.error(e)
        return False
```
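Concatenating `&key=value` pairs by hand works for simple values, but anything containing `&`, `=`, `#`, or spaces would corrupt the query string. A hedged sketch using `urllib.parse.urlencode` instead, assuming the parameter values are plain, already-decoded strings (values still percent-encoded from Slack's form body would be encoded twice). The function name and the build-token-root style URL below are hypothetical.

```python
from urllib.parse import urlencode


def build_jenkins_url(base_url: str, params: dict) -> str:
    """Append params to base_url, percent-encoding keys and values."""
    separator = '&' if '?' in base_url else '?'
    return base_url + separator + urlencode(params)


url = build_jenkins_url(
    'https://jenkins.example.com/buildByToken/buildWithParameters?job=myFolder/myjob&token=abc',
    {'TEMPLATE_REPO': 'template-client', 'REPO_NAME': 'my_repo', 'Debug': False},
)
print(url)
```

`urlencode` converts non-string values with `str()`, so the boolean `Debug` flag is sent as `False` or `True`, matching the hand-built version.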

Responding to Slack: If Jenkins returns a 200 or 201, the Lambda responds to Slack with the parameter payload that was sent to Jenkins. Otherwise, it replies with a generic error message.

```python
if success:
    return {
        'statusCode': 200,
        'body': json.dumps(f'Building Service: {params}')
    }
return {
    'statusCode': 200,
    'body': json.dumps('Unexpected Error Occurred.')
}
```
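Slack also accepts a JSON object with a `text` field as the slash-command response, which is the shape `give_help` already returns. A hedged sketch of a small helper (hypothetical name) that wraps any message the same way, so the success and error paths render like the help output. A `response_type` of `in_channel` posts the reply for everyone in the channel, while `ephemeral` shows it only to the user who ran the command.

```python
import json


def slack_reply(text: str, in_channel: bool = True) -> dict:
    """Build an API Gateway proxy response carrying a Slack message."""
    return {
        'statusCode': 200,
        'headers': {'Content-Type': 'application/json'},
        'body': json.dumps({
            'response_type': 'in_channel' if in_channel else 'ephemeral',
            'text': text,
        }),
    }


reply = slack_reply('Building Service: client my_repo')
print(reply['body'])
```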

Jenkins Pipeline

The fourth, and final, component is a Jenkins Pipeline file which will handle the Terraform plan and apply steps to run the Terraform and create the required repositories.

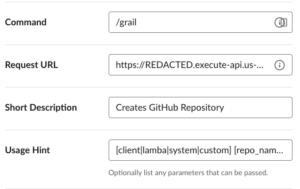

Slack

Finally, using the Slack GUI, we create a slash command and point it to the API Gateway that sits in front of our GRAIL Lambda.

In our current setup, we have two Slack apps: Ops-Development and Ops-Production.

Ops-Development

Ops-Development is the Slack application that we use for testing any changes prior to deploying to Production. Ops-Development points to an API Gateway in our development AWS account, is restricted to a private Slack channel, and triggers jobs in our Dev-Jenkins environment. When running Terraform in our Dev-Jenkins environment, we stop after the Terraform Plan stage so that no actual GitHub repositories are created.

Here is an example of running a /grail-dev command and the response that is received from Jenkins.

Ops-Production

Ops-Production is our production Slack application. Ops-Production points to the API Gateway in our production AWS account and triggers jobs in our Prod-Jenkins environment. Running this command is currently restricted to a public Slack channel that limits who can post in it. For transparency, we send Slack notifications back to the public channel when the Jenkins Terraform jobs are triggered, as well as the jobs’ results.

Next Steps

We have several improvements still planned for this project:

- Move the management of the Global Variables out of our Lambda and into a separate layer.

- Add a subcommand for creating a new Template Repository.

- Add the ability to search GitHub for a list of all Template Repositories.

- Clone a Templatized Repo based on the repository name, eliminating the need for the template_repo dictionary in the handle_command() function.
