
Alert/Alarm Fatigue – Tired of Being Tired of SIEM Alerts

In our ever-evolving detection-as-code (DaC) series, we’ve covered Putting Theory into Practice, Integrating SIEM with CI/CD, and SIEM CI/CD challenges. Today, we’re shifting focus to a major problem in any Security Operations Center (SOC): alert fatigue, and how automation helps fight it. Any SOC analyst knows that the number of alerts coming in during a shift can get overwhelming, and deciding which alerts to prioritize can be difficult. Cue “Alert Fatigue”: desensitization to alerts caused by the sheer volume being triggered. Every SOC endures alert fatigue, and fighting it off is an ongoing battle. There are many ways to combat it, from tuning detections to creating automations. Most recently, we added automation to our ticketing process to cut the time it takes to document and close an alert. Here’s a look at our latest skirmish with alert fatigue.

In a Minute

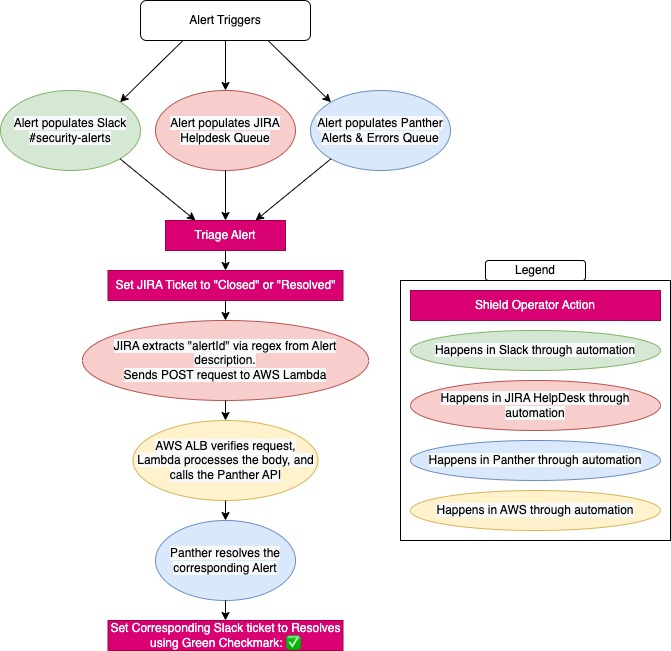

At FloQast, when a security rule is triggered in our Security Information and Event Management (SIEM) platform and an alert fires, it gets pushed into three main queues: Slack, Jira, and Panther. We use Slack as our initial notification queue. Jira is our primary documentation source for alerts, giving us a quick and easy way to search back in time (via JQL) for audits, investigations, and other tickets. Lastly, Panther (our SIEM) is where we view the event logs and get a more holistic view of not only why the alert fired, but also the context around that event. This meant that our analysts needed to watch for alerts in Slack, open the corresponding alert in Jira, open Panther to view the associated logs, navigate back to Jira to document, and then close the alert in each platform after triage – FOR EVERY. SINGLE. ALERT. This was not only redundant and obnoxious, but it also invited human error: after triaging alerts all day, an analyst could forget to close an alert in one of the three channels, leaving the three sources out of sync. Discrepancies like that can have major business impacts. On top of that, the repetition took valuable time away from other projects.

Fight the Fatigue

With 30+ alerts coming in a day, it was evident to our team that our alert triage process was inefficient and needed an upgrade. Who has time to keep performing the same task in three different places? On top of that, which alert queue would we use as our source of truth? To solve these problems, we turned to Jira Automations. The idea behind the automation flow is that when an Alert is set to Closed in Jira, the corresponding alert is set to Resolved in Panther. So, how did we pull this off?

Jira Automations – When an analyst transitions an Alert to Closed, our automation flow kicks off and extracts the “AlertID” from the ticket description. A POST request is then created and sent to our API hosted on AWS.
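Jira Automation does this step inside its own rule builder, so there is no Python on the Jira side. The sketch below only illustrates the logic: pull the ID out of the description and wrap it in a JSON body. The `AlertID: <value>` line format and the `issueKey`/`alertId` field names are assumptions for illustration, not our actual ticket template or payload.

```python
import json
import re
from typing import Optional

# Hypothetical description format: a line such as "AlertID: abc123".
ALERT_ID_PATTERN = re.compile(r"AlertID:\s*([A-Za-z0-9-]+)")


def extract_alert_id(description: str) -> Optional[str]:
    """Return the first AlertID found in a ticket description, or None."""
    match = ALERT_ID_PATTERN.search(description)
    return match.group(1) if match else None


def build_close_payload(issue_key: str, description: str) -> str:
    """Build the JSON body for the POST request sent when a ticket closes."""
    alert_id = extract_alert_id(description)
    if alert_id is None:
        raise ValueError(f"No AlertID found in {issue_key}")
    # Field names here are illustrative assumptions.
    return json.dumps({"issueKey": issue_key, "alertId": alert_id})
```

Failing loudly when no ID is present is a deliberate choice in this sketch: a ticket that closes without resolving its alert is exactly the kind of discrepancy the automation is meant to prevent.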

AWS ALB – An Application Load Balancer (ALB) serves as the HTTP endpoint for our API. This endpoint receives the POST request from Jira, authenticates the request, and passes the body of the request to our Lambda.
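For readers who haven’t put a Lambda behind an ALB before, here is a minimal sketch of what the hand-off can look like. The event keys (`httpMethod`, `body`, `isBase64Encoded`) and the response fields are the standard ALB-to-Lambda shape; the `alertId` field and the handler logic are assumptions for illustration, not our production code.

```python
import base64
import json


def parse_alb_body(event: dict) -> dict:
    """Decode the JSON body of an ALB-to-Lambda event."""
    raw = event.get("body") or ""
    if event.get("isBase64Encoded"):
        raw = base64.b64decode(raw).decode("utf-8")
    return json.loads(raw)


def alb_response(status_code: int, message: str) -> dict:
    """Build a response in the shape an ALB expects from a Lambda target."""
    return {
        "statusCode": status_code,
        "statusDescription": f"{status_code} {message}",
        "isBase64Encoded": False,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": message}),
    }


def handler(event: dict, context: object) -> dict:
    """Accept a POST, pull out the (hypothetical) alertId field, and reply."""
    if event.get("httpMethod") != "POST":
        return alb_response(405, "Method Not Allowed")
    try:
        alert_id = parse_alb_body(event)["alertId"]
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, missing field, or a body that is not an object.
        return alb_response(400, "Bad Request")
    # The call that resolves alert_id in the SIEM would go here.
    return alb_response(200, "OK")
```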

AWS Lambda – Once the Lambda is passed the event data, it will extract the AlertID variable and format it in a GQL query. This query is passed to the Panther API which sets the corresponding alert to Resolved.

Panther API – The Panther SIEM has a GraphQL endpoint that accepts a GQL query as input. The Lambda will pass a mutation query to the endpoint to edit the Alert’s status by ID.
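The Lambda and Panther steps above can be sketched roughly as follows. Treat the mutation name (`updateAlertStatusById`), its input type, and the `X-API-Key` header as assumptions based on Panther’s public GraphQL documentation; check them against your own instance’s schema before relying on them. The function only builds the request, so nothing is sent until you pass it to `urllib.request.urlopen`.

```python
import json
import urllib.request

# Assumed mutation shape; confirm against your Panther instance's schema.
RESOLVE_ALERT_MUTATION = """
mutation ResolveAlert($input: UpdateAlertStatusByIdInput!) {
  updateAlertStatusById(input: $input) {
    alerts { id status }
  }
}
"""


def build_resolve_request(
    api_url: str, api_token: str, alert_id: str
) -> urllib.request.Request:
    """Build (but do not send) a GraphQL request that resolves one alert."""
    payload = {
        "query": RESOLVE_ALERT_MUTATION,
        # Passing the ID as a variable avoids string-formatting it into the query.
        "variables": {"input": {"ids": [alert_id], "status": "RESOLVED"}},
    }
    return urllib.request.Request(
        api_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-API-Key": api_token},
        method="POST",
    )
```

Using GraphQL variables instead of interpolating the AlertID into the query text means a malformed ID in a ticket description can’t change the shape of the mutation.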

Authentication – We use a couple of tokens, stored securely in AWS SSM, to authenticate our requests. The first token is passed from Jira to our Lambda, ensuring the request comes from our own Jira instance. We also verify the IP address that the request was sent from. The second token authenticates the call from our Lambda to the Panther API. Together, these checks keep unauthorized callers from triggering the Lambda or changing alerts in Panther.
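As a rough illustration of the inbound checks, the sketch below compares a shared token in constant time and tests the caller’s IP against an allowlist. The header name `x-automation-token` and the `203.0.113.0/24` range (a documentation-only network) are hypothetical, and the expected token is passed in as a plain argument; in practice it would be read from SSM at runtime.

```python
import hmac
import ipaddress

# Hypothetical allowlist; 203.0.113.0/24 is a documentation-only range.
ALLOWED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
TOKEN_HEADER = "x-automation-token"  # hypothetical header name


def is_allowed_ip(source_ip: str) -> bool:
    """Return True if source_ip parses and falls inside an allowed network."""
    try:
        address = ipaddress.ip_address(source_ip)
    except ValueError:
        return False
    return any(address in network for network in ALLOWED_NETWORKS)


def is_authorized(headers: dict, expected_token: str) -> bool:
    """Check the shared token and the caller's IP from ALB-supplied headers."""
    supplied = headers.get(TOKEN_HEADER, "")
    # An ALB appends the connecting client's address to X-Forwarded-For,
    # so the last entry is the one the load balancer itself observed.
    forwarded = headers.get("x-forwarded-for", "")
    source_ip = forwarded.split(",")[-1].strip()
    # compare_digest avoids leaking how many leading characters matched.
    token_ok = hmac.compare_digest(supplied.encode(), expected_token.encode())
    return token_ok and is_allowed_ip(source_ip)
```

Reading the last `X-Forwarded-For` entry rather than the first matters here: earlier entries can be set by the caller, so trusting them would make the IP check easy to spoof.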

Top Priority

Our new automation has been deployed in our environment for about six months now, and team members can take on more quarterly tasks thanks to the freed-up time. We’ve seen an increase not only in the number of projects being taken on, but also in their complexity. With this automation in place, we use Jira as our source of documentation and Slack as our status-tracking queue. Panther still serves as our place to investigate events, but its alert queue no longer needs to be updated by hand for every ticket, since Jira takes care of that now. Combined with our continuous fine-tuning of rules and detections, alert fatigue for the team is at an all-time low. We’ll keep refining our event triage processes to keep fighting it off.

Not Finished

Although we’ve automated some of our processes, we are far from finished! So what’s next for our Corporate Security team? We’re looking to continue to improve our alerting, ticketing, and triaging processes by creating more automations! Our improvement process is an iterative effort, and we are looking to SOAR (😉) into the new year…
