
Increasing Predictability for Software Estimations

In any software engineering department, a common question is, “Is it technically feasible to [do this thing]?” The follow-up question then becomes, “Well, how long will that take?”

Previously, I wrote about how FloQast uses relative sizing for epics to understand a team’s roadmap. Unfortunately, t-shirt sizing doesn’t produce an exact date, so the date an epic will land is in the eye of the beholder, which causes confusion. Beyond that, what happens if those dates are wrong? And why did they go wrong?

Taking a Drive

If I were to drive down the street where I live, it would take about 1 minute. If I were to drive from west Los Angeles to east Los Angeles, it may take an hour. Maybe more, maybe less – it depends.

Now what if I had to drive from Los Angeles to New York, and on the way the car broke down and I had to change the car’s transmission (tech debt refactor), make a stop in Arizona to add more items to the trunk of the car (scope changes), switch to another driver in Kansas (personnel changes), and pick up my friend the moment she finishes her dinner in Pennsylvania (cross-org collaboration)?

It would take a lot longer than I originally thought.

Estimation Confidence

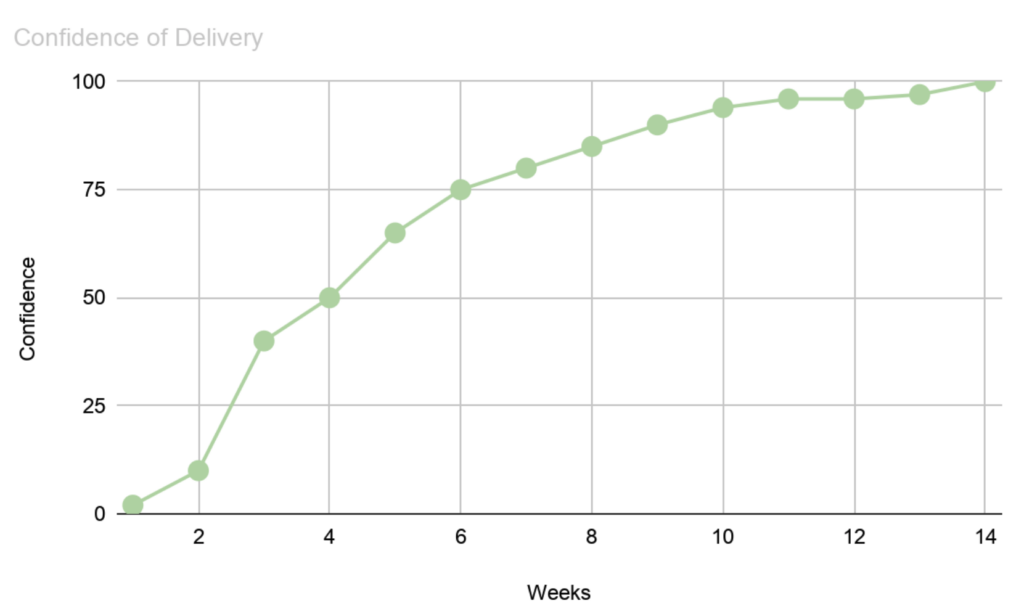

It’s safe to say that confidence about whether an epic will complete is higher near the end than at the beginning. It’s reminiscent of an asymptote; except instead of never reaching zero, confidence never reaches 100 until completion.

If I were to visualize that for an epic that takes about a quarter, it could look something like this:

On Day 1, confidence is zero. By the time we get closer to the end, the confidence score (a made up metric for the sake of illustrating this point) gets higher and higher.

Predictability

One of the things we’re working on in FloQast Engineering is refining our predictions so that they get better and our teams become more predictable.

What is predictability? We like this definition from Simon Noone:

“Predictability is delivering something when you say you are going to.”

Making teams better at predictability has a two-fold benefit: team-level ownership of timelines, and business-level visibility into upcoming features.

Stepping back, though: what causes time estimates to change? Usually the cause falls into one of two categories:

- Scope changes
  - At either the product or the engineering level, we’re surprised by something we didn’t account for earlier. This can be customer feedback on a new feature that adds more work, a part of the code that turned out to be in bad shape, or something else.

- Team health
  - Issues with people, process, or tech. This can be a team member who is no longer on the team, a slowdown in our release queue, a shared dependency issue, or something else.

With these causes in mind, it made sense to build them into iterative feedback loops that inform both the team itself and its stakeholders.

Team Health Checks

A team health check is a self-assessment tool that teams can run in their Agile ceremonies to get a sense of how work is trending. Whereas a retro focuses on what went well and what can be improved, a team health check focuses on specific aspects of the team and asks the team to assess itself on each one. Think of it as a visit to the doctor, where they check your weight, blood pressure, and temperature. If there’s a fever, that’s an indicator of something to look into.

Atlassian provides a team health check template with some great axes for taking a snapshot of a given project at a point in time. Since one of the problems we wanted to solve was understanding the “why” behind a project at each snapshot, this template felt like the right tool for the job.

Just as we use Redux to hold the state of our frontend, team health checks capture the state of our teams during epics.

Asymptotic Estimation

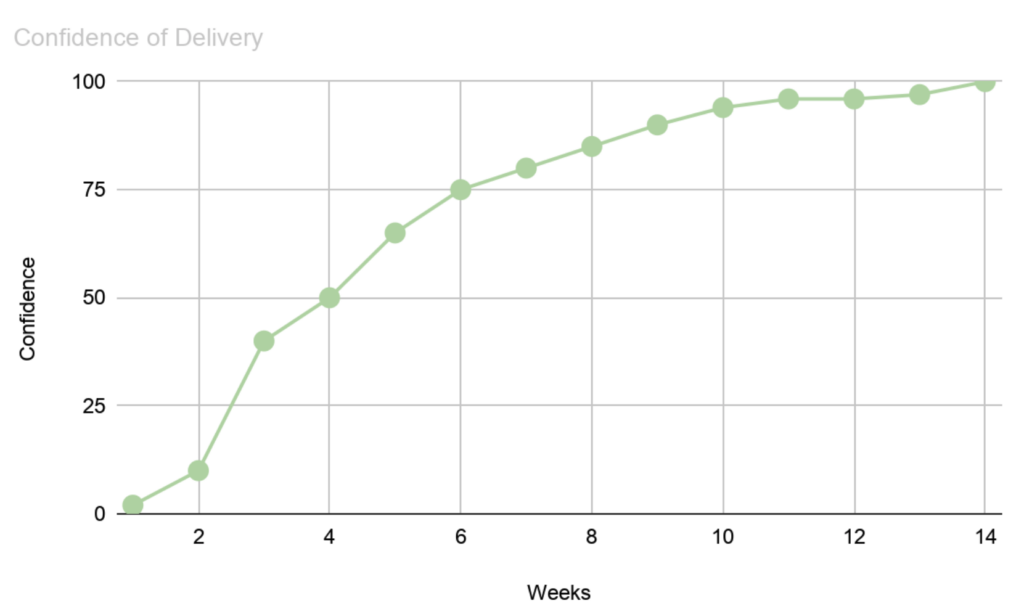

Using team health checks as snapshots in time, we layer in incremental team-level time estimates. Mapped onto the previous graphic, the checkpoints are:

- Checkpoint 1 – Product and Engineering Discovery
  - Team health check and estimate
- Checkpoint 2 – Engineering Requirements Document
  - Team health check and estimate
- Checkpoint 3 – ~50% complete
  - Team health check and estimate
- Checkpoint 4 – ~75% complete
  - Team health check and estimate
- Checkpoint 5 – ~90% complete
  - Team health check and estimate
- Checkpoint 6 – ~95% complete
  - Team health check and estimate

At each checkpoint, the team gives a gut-check estimate of when it thinks the epic will complete. At Checkpoints 1-3 the estimate may be at the granularity of months, at Checkpoints 4-5 weeks, and by the end, days. If, for example, a marketing push needs to coincide with the release of a new feature, information from Checkpoints 4-6 can help keep track of release timing.

Also at each checkpoint, the team picks one area rated red or yellow to improve iteratively, moving it towards green.
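To make the checkpoint loop concrete, here is a minimal Python sketch of what a team might record at each checkpoint: its gut-check end date and a red/yellow/green rating per health check area, plus a helper that picks one red or yellow area to focus on. The class names, the area names, and the rule of preferring red over yellow (with ties broken alphabetically) are hypothetical choices for illustration, not FloQast’s tooling or the exact axes of Atlassian’s template.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import IntEnum
from typing import Optional


class Rating(IntEnum):
    """Traffic-light rating for one health check area; lower means less healthy."""
    RED = 0
    YELLOW = 1
    GREEN = 2


@dataclass
class Checkpoint:
    """One checkpoint in an epic: the team's gut-check end date plus its health check ratings."""
    name: str
    estimated_end: date
    # Maps a health check area (hypothetical names) to its rating at this checkpoint.
    ratings: dict[str, Rating] = field(default_factory=dict)

    def focus_area(self) -> Optional[str]:
        """Pick one red or yellow area to improve next (red first, ties alphabetical); None if all green."""
        candidates = [(rating, area) for area, rating in self.ratings.items() if rating != Rating.GREEN]
        if not candidates:
            return None
        # Tuples compare by rating first, then by area name.
        return min(candidates)[1]


# Example: a checkpoint roughly halfway through an epic (all values are made up).
checkpoint = Checkpoint(
    name="Checkpoint 3 - ~50% complete",
    estimated_end=date(2024, 9, 30),
    ratings={
        "shared understanding": Rating.GREEN,
        "dependencies": Rating.RED,
        "release process": Rating.YELLOW,
    },
)
print(checkpoint.focus_area())  # -> dependencies
```

Keeping one record per checkpoint means the end-of-epic chart and the sequence of estimates fall out of the same list of `Checkpoint` objects.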

Estimations With Context

At the end of the epic, we should have a fully filled-out chart similar to the one below, along with the estimates the team gave at each checkpoint.

What if an epic isn’t this big, and something that may take a couple of weeks doesn’t need this overhead? Then great! For a small epic, the process may cost more than it’s worth. But for larger epics, it should give all parties an iterative feedback loop, plus a retrospective document to review before starting the next epic.

Measuring Predictability

If predictability is delivering something when you say you’re going to, how can we measure it? The way we’re currently looking at it is: how often does a team change its end date for an epic? But there’s a caveat. If, at Checkpoint 6, the estimate goes from “next Wednesday-ish” to “Thursday at 1pm,” that’s a good thing: it’s an even more precise timing. If the estimate goes from “next Wednesday-ish” to “next year,” not so great.

So what we’re really looking at is how often a team changes its epic end date by some amount x.

That is to say, what matters is not simply that the date changed, but that it moved earlier or later by more than some standard deviation compared to before.

Since we’re still experimenting with this process, we’ll establish a baseline standard deviation for each team over 3 epics. Once we have that baseline, we can continue to improve iteratively.
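One possible way to turn this into a number is sketched below in Python, under several assumptions that are ours rather than FloQast’s: each epic is a list of the team’s estimated end dates, one per checkpoint; a “change” is the number of days the estimate moved between consecutive checkpoints; the baseline is the mean and population standard deviation of all changes across a team’s first 3 epics; and a change counts as unusual when it lies more than `k` standard deviations from the baseline mean. The function names and the `k` parameter are hypothetical.

```python
from datetime import date
from statistics import mean, pstdev


def date_shifts(estimates: list[date]) -> list[int]:
    """Days the estimated end date moved between consecutive checkpoints (positive = later)."""
    return [(later - earlier).days for earlier, later in zip(estimates, estimates[1:])]


def build_baseline(baseline_epics: list[list[date]]) -> tuple[float, float]:
    """Mean and population standard deviation of every shift across the baseline epics."""
    shifts = [s for epic in baseline_epics for s in date_shifts(epic)]
    return mean(shifts), pstdev(shifts)


def unusual_change_rate(estimates: list[date], baseline: tuple[float, float], k: float = 1.0) -> float:
    """Fraction of an epic's date changes lying more than k standard deviations from the baseline mean."""
    avg, sd = baseline
    shifts = date_shifts(estimates)
    if not shifts:
        return 0.0
    unusual = [s for s in shifts if abs(s - avg) > k * sd]
    return len(unusual) / len(shifts)


# Baseline from three earlier epics (made-up dates): shifts of 0, 4, 0 and 4 days.
baseline = build_baseline([
    [date(2024, 1, 10), date(2024, 1, 10), date(2024, 1, 14)],
    [date(2024, 4, 1), date(2024, 4, 1)],
    [date(2024, 7, 1), date(2024, 7, 5)],
])

# A new epic whose estimate slips 7 days early on, then is refined by 1 and 2 days.
new_epic = [date(2024, 9, 2), date(2024, 9, 9), date(2024, 9, 10), date(2024, 9, 12)]
print(unusual_change_rate(new_epic, baseline))
```

Here the baseline works out to a mean shift of 2 days with a standard deviation of 2 days, so the 7-day slip is flagged while the small refinements near the end are not, giving a rate of one in three. Comparing against the team’s own baseline mean, rather than against zero, judges each team against its own history; a stricter variant could flag any shift where `abs(shift) > k * sd`.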

The Why With the When

Getting estimates right for a large epic is challenging. By adding team health checks into the mix, we’re looking to capture the “why” alongside the “when” of epic timelines, with the goal of making our teams more predictable over time.
