
Incident Management Through the Years at FloQast

I have been lucky enough to see our incident management process evolve at FloQast over the years.

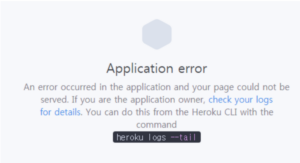

When I began working here as a software engineer in 2016, I think it was only a matter of days before an incident occurred. Back then, an incident might have started with a user emailing us a screenshot of an infamous error message.

At that point, our ‘incident management process’ kicked off. I could be wrong, but I think these were the formal steps:

- Our COO told the CTO sitting at the desk next to him that the app was down.

- Our CTO raced to log into the Heroku console and either restart a server or provision a new dyno.

For some context, at that point in time our company had around 15-20 employees in total. Our R&D department had a headcount of 6, with no QE Engineers and no DevOps Engineers. Small as the team was, it was a much simpler time for our incident management process. Our entire application was a relatively simple “monolith,” and all of our code lived in a single repository. As a result, there was rarely any question of what was broken or where the code that needed fixing lived. It was really just a matter of who would be assigned to address the issue. It’s also worth mentioning that our entire team sat right next to each other in the same room, so communication was never a challenge.

Incident Management in 2021

A Bigger Team With Bigger Problems

Fast forward to today. Our department looks a little bit different:

- Our R&D headcount is over 80.

- We have QE Engineers, DevOps Engineers, Security Engineers, Product Managers, and Product Support Reps. We used to have none of these!

- None of us are sitting next to each other. We are all working remotely.

- We have a lot more product that can (and does) break.

- For what it’s worth, Heroku is a distant memory, and it’s safe to say we are well past the days of having all code in one repo.

As the team grew from 6 people to over 80, we developed a more formal incident management process. There was no single day when everything changed. Some components of our process developed naturally as we added people in new roles, whereas others required very intentional change and practice. Don’t worry, though: things still break, and with that come plenty of opportunities to improve our incident management process.

A Sample Incident Today

Today, an incident might go something like this:

- A handful of customers report a similar defect in our application.

- A “Product Support Representative” (i.e., client-facing support) is concerned. This looks like a new problem, and a serious one at that.

- The Product Support Representative posts a message (probably with some screenshots) in our #engineering-support Slack channel. Our company specifically uses this channel for escalating defects to our engineering and product teams.

- An engineer reviews the Slack post and agrees that the issue is concerning. A Product Manager or Engineering Manager agrees that the issue is severe enough to warrant going through our incident management process.

- An “Incident Controller” (aka the “IC,” typically an Engineering Manager) takes ownership of the incident. The IC creates a Slack channel to track the incident and invites all relevant parties to join.

- The IC is not tasked with actually fixing the problem, but instead leads all communication and provides regular updates in the channel until the issue is resolved.

- Finally, after the incident concludes, the IC schedules and leads a post-mortem, ideally within a few days of the incident ending.

This process does not always follow these exact steps, but at a minimum, we like to see the following:

- Someone should take ownership of the incident (i.e. the Incident Controller)

- There should be one place to consolidate communication (today we rely heavily on Slack)

- Regular updates should be provided until the incident is resolved

- A post-mortem should be held following the conclusion of the incident
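The four minimum expectations above can be read as a simple checklist. The Python sketch below is illustrative only: the `Incident` record and its field names are hypothetical, not our actual tooling, and the one-hour maximum gap between status updates is an assumed threshold, since the process itself only asks for “regular” updates.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Incident:
    """Hypothetical incident record; field names are illustrative only."""
    opened_at: datetime
    controller: Optional[str] = None  # the Incident Controller (IC), if anyone owns it
    channel: Optional[str] = None     # the one place communication is consolidated
    updates: List[datetime] = field(default_factory=list)  # when status updates were posted
    resolved_at: Optional[datetime] = None
    postmortem_held: bool = False


def missing_requirements(incident: Incident,
                         max_update_gap: timedelta = timedelta(hours=1)) -> List[str]:
    """List which of the four minimum expectations the incident does not meet."""
    problems = []
    if incident.controller is None:
        problems.append("no Incident Controller has taken ownership")
    if incident.channel is None:
        problems.append("no single channel for communication")
    # "Regular updates" (assumed meaning): no gap between opening, consecutive
    # updates, and resolution (or the current time, if still open) may exceed
    # max_update_gap.
    end = incident.resolved_at or datetime.now()
    checkpoints = [incident.opened_at, *sorted(incident.updates), end]
    if any(later - earlier > max_update_gap
           for earlier, later in zip(checkpoints, checkpoints[1:])):
        problems.append("status updates were not regular")
    if incident.resolved_at is not None and not incident.postmortem_held:
        problems.append("no post-mortem held after resolution")
    return problems


start = datetime(2021, 6, 1, 9, 0)
incident = Incident(opened_at=start, controller="on-duty engineering manager",
                    channel="#incident-41-slow-app-load-times",
                    updates=[start + timedelta(minutes=30)],
                    resolved_at=start + timedelta(minutes=75))
print(missing_requirements(incident))  # ['no post-mortem held after resolution']
```

A checklist like this only flags gaps; deciding what to do about them is still the IC’s call.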

Challenges With Our Incident Management Process

I think three challenges stand out with our current process:

- Determining whether there is, in fact, an “incident”

- Getting the right people involved

- Getting the most out of a post-mortem

I will go into a bit more detail on each of these challenges and how we try to overcome them.

Challenge #1: Determining Whether There Is in Fact an “Incident”

We do not like making a habit out of interrupting our engineers. All of our engineering teams follow an Agile development methodology. Incidents can destroy sprint goals and team momentum. Multiple incidents in a short timeframe can cause team burnout. Ideally, we avoid triggering our incident management process for a low-priority defect.

We found it helpful to create a clear definition of an incident. We have specifically defined an engineering incident as “Anything that interrupts our normal business process.” In other words, our systems are in a failure state that prevents our clients from using a critical part of our application. The reality is that applying this definition still sometimes involves judgment. At a minimum, we always want to consider the following factors before we open an incident:

- How widespread is the issue?

- How severe is the issue?

Unfortunately, we have found that no matter how specific our definition of an incident may be, we still end up in situations where we are uncertain whether a defect is worth treating as an incident. In these situations, the Product Manager, Engineering Manager, and Tech Lead of the team that owns the defect hold a quick check-in. If not everyone is available to meet, those who are available make the final decision. We like to err on the side of caution: when the severity is uncertain, we prefer to treat a defect as an incident, even if it means derailing a sprint.
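To make the “err on the side of caution” rule concrete, here is a minimal Python sketch of a triage decision built on the two questions (how widespread, how severe). The exact policy encoded here is an assumption for illustration: a clear “no” to either question marks a low-priority defect, and any remaining uncertainty tips the decision toward opening an incident. `Answer` and `should_open_incident` are hypothetical names.

```python
from enum import Enum


class Answer(Enum):
    """Answer to one triage question; UNSURE captures a judgment call."""
    NO = "no"
    UNSURE = "unsure"
    YES = "yes"


def should_open_incident(widespread: Answer, severe: Answer) -> bool:
    """Hypothetical triage rule for the two questions above.

    Assumed policy: a clear NO to either question means a low-priority
    defect that goes to the owning team's backlog. Otherwise every answer
    is YES or UNSURE, and uncertainty is resolved in favor of opening an
    incident.
    """
    return Answer.NO not in (widespread, severe)


# Many customers affected, severity unclear: open an incident.
print(should_open_incident(Answer.YES, Answer.UNSURE))  # True
# Widespread but clearly not severe (e.g. a cosmetic glitch): backlog it.
print(should_open_incident(Answer.YES, Answer.NO))      # False
```

In practice the answers come out of the quick check-in between the Product Manager, Engineering Manager, and Tech Lead; the function only captures which way ties break.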

Challenge #2: Getting the Right People Involved

Why Is This Difficult?

Sometimes this just isn’t straightforward. For some context, our company has many products that are essentially embedded in a single web application. As much as we would like these products to be truly independent of one another, our customers, not surprisingly, gain quite a bit of value from our products being able to interact with one another. Most of the time, incidents are clearly isolated to one part of our application. Offhand, though, I can think of at least two types of incidents where getting the right people involved is non-trivial:

- Errors in parts of our application UI that are shared by more than one of our products (and engineering teams)

- Performance-related issues (e.g., users reporting that the app is slow to load). These could stem from application code defects owned by our software engineers or from infrastructure problems owned by our DevOps team.

How Do We Solve This?

As much as we prefer not to interrupt our engineers, it’s important to figure out the proper owners of the incident sooner rather than later. The last thing we want is for one team to spend a day investigating a problem, only to find out it relates to code owned by an entirely different team. In the short term, we have a couple of procedures in place to make sure the right people are involved in each incident:

- We use the #engineering-support Slack channel mentioned above, where our Product Support team can escalate concerning issues observed in our application. Thankfully, this channel is not very noisy today, so when something ambiguous comes up, engineers from different teams can work together to figure out which team is generally responsible.

- Once we actually open an official incident, we create a separate Slack channel (e.g. #incident-41-slow-app-load-times) and send out an announcement in our main R&D Slack channel that a new incident is underway with a link to the new incident Slack channel. This way, no one gets inadvertently excluded and there are no “hidden incidents.”
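Deriving incident channel names mechanically keeps them predictable and easy to search. The Python sketch below produces names in the `incident-<number>-<summary>` pattern shown above. It assumes a conservative reading of Slack’s channel naming rules (lowercase letters, numbers, hyphens, and underscores; at most 80 characters); `incident_channel_name` is a hypothetical helper, and how the incident number is assigned is left out.

```python
import re

# Slack channel names are limited to 80 characters; this sketch also
# restricts them to lowercase a-z, 0-9, hyphens, and underscores.
MAX_CHANNEL_NAME_LENGTH = 80


def incident_channel_name(number: int, summary: str) -> str:
    """Build a name like 'incident-41-slow-app-load-times' from a free-text summary."""
    # Replace each run of characters other than a-z, 0-9, and '_' with a single
    # hyphen, so spaces, punctuation, and repeated hyphens collapse together.
    slug = re.sub(r"[^a-z0-9_]+", "-", summary.lower()).strip("-")
    name = f"incident-{number}-{slug}" if slug else f"incident-{number}"
    # Respect the length limit without leaving a trailing hyphen.
    return name[:MAX_CHANNEL_NAME_LENGTH].rstrip("-")


print(incident_channel_name(41, "Slow app load times"))
# incident-41-slow-app-load-times
```

The same generated name can then be pasted into the announcement in the main R&D channel, so the link always matches the channel that was actually created.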

Thinking longer term, it’s also important for us to ask ourselves why team ownership wasn’t obvious in the first place. During the incident post-mortem, we usually add an action item to improve our system monitoring and error messaging so that the underlying issue is more obvious the next time around.

Challenge #3: Getting the Most Out of a Post-Mortem

For those unfamiliar, an incident post-mortem is a meeting that a team holds after an incident concludes. During the meeting, the team reflects on the root cause of the incident and determines whether they need to take any actions going forward.

It should go without saying that incidents are stressful. They bring with them unexpected, yet urgent, work. As mentioned above, incidents also mess with sprint goals and team momentum. Sometimes the last thing everyone wants is another meeting. Given the tremendous potential benefits of a post-mortem, it’s important to do everything you can to set up the meeting for success. We have found the following practices improve the quality of our post-mortems:

- We conduct our post-mortems as soon as possible after the conclusion of an incident so that the context is fresh. If the current sprint is doomed, so be it. The team should simply adjust their sprint goal.

- At the beginning of our post-mortems, the Incident Controller will review the timeline of events during the incident. Sometimes, we spend so much time thinking about how to resolve the incident that we forget how it surfaced in the first place. Reviewing the timeline is a great way to have the whole team reflect on the order of events that took place. This allows everyone to think more critically about the root cause of the incident.

- Create action items during the meeting. Usually, this amounts to us creating JIRA tickets near the top of our team’s backlog. If you don’t create action items during the post-mortem, you probably never will.

- Show gratitude. You should be proud that the team was able to work together to solve a problem. Even if the incident itself negatively impacted the company, it’s important to view the incident response as a success, not a failure.
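The timeline review at the start of a post-mortem is mostly a matter of putting events back in order. As a minimal Python sketch, assuming events have been collected as hypothetical `(timestamp, description)` pairs (for example, copied by hand from the incident Slack channel), the helper below sorts them and labels each with the minutes elapsed since the first one. The sample events are made up for illustration.

```python
from datetime import datetime
from typing import List, Tuple

# Hypothetical shape: (timestamp, short description of what happened).
Event = Tuple[datetime, str]


def format_timeline(events: List[Event]) -> List[str]:
    """Sort events chronologically and prefix each with minutes since the first one."""
    ordered = sorted(events, key=lambda event: event[0])
    if not ordered:
        return []
    start = ordered[0][0]
    lines = []
    for timestamp, description in ordered:
        elapsed_min = int((timestamp - start).total_seconds() // 60)
        lines.append(f"T+{elapsed_min} min ({timestamp:%H:%M}): {description}")
    return lines


events = [
    (datetime(2021, 6, 1, 9, 40), "IC opens the incident channel"),
    (datetime(2021, 6, 1, 9, 0), "Customers report the defect to Product Support"),
    (datetime(2021, 6, 1, 9, 15), "Support posts in #engineering-support"),
]
for line in format_timeline(events):
    print(line)
```

Seeing relative times side by side makes it easier to ask how the incident surfaced and where the delays were, rather than focusing only on the fix.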

Thanks for Reading!

I hope you enjoyed this look behind the scenes of our incident management process. We have come a long way over the years, and we certainly have much to improve upon going forward. Here’s to breaking things!
